CRAM format notes

Life as a Bioinformatics Freelancer: The tools

Life as a Bioinformatics Freelancer: Finding work

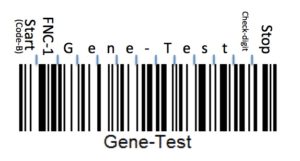

Encoding information with barcodes
